Front Matter

RedShred lets you use machine learning to reshape content from documents and other 2D media into a structured database.

Our platform is built around a three-step workflow: extract, enrich, and reshape. RedShred provides:

- flexible extractors to help you find the right content in your documents based on page layouts, page content, or any mix of the two.

- deep enrichments that attach new, customizable metadata to segments, ranging from object detection in embedded photos to entity recognition in text.

- an API-first SaaS platform that treats your enriched content as a database, making it easy to build compelling new experiences on top of the enrichment metadata.

RedShred keeps all of your content organized by its physical location in the documents, along with all of its enriched metadata. This lets you search, scan, and query your data to power new intelligent applications that reshape the knowledge found in those documents.

Our Model: Documents, Collections, Perspectives, and Segments

RedShred organizes user-uploaded documents into collections. Both permissions and enrichment configuration are managed at the collection level. Because every document in a collection is processed the same way, a collection typically holds documents of a single genre. For example, you might have one collection of maintenance manuals, another of research papers, and a third of ebooks.

We support a wide array of formats and handle them seamlessly at upload time, including PDF, Microsoft Office (Word, PowerPoint), plain text, and scanned images, among others. If you have a format we don’t support, don’t hesitate to reach out to us at hello@redshred.com.

Perspectives and Segments

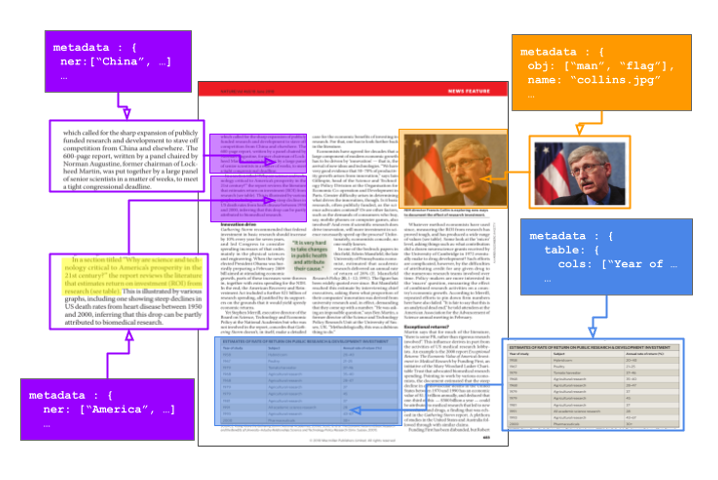

When documents are uploaded, they are queued for reading, where they are processed and then made available through the API and the web viewer. Once a document is read, its metadata is attached to segments on the document. Segments are regions of the physical layout where items of interest were found. For example, a segment might be a paragraph of text whose enrichment data is a set of named entities from a state-of-the-art machine learning model, or it might be a photograph on a page whose enrichment data is a list of the people seen in it.

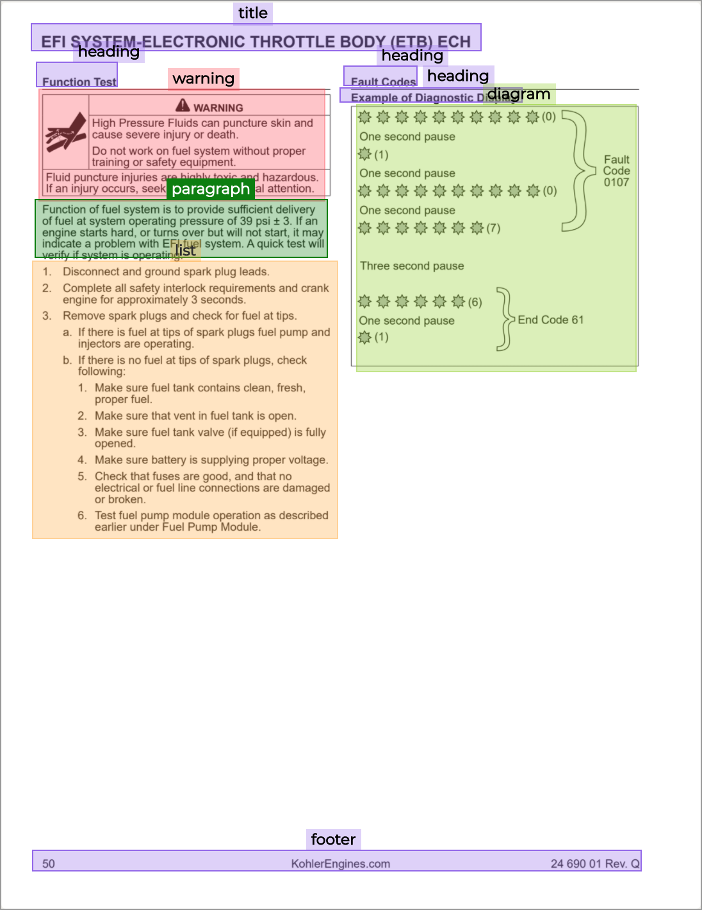

Segments are organized into perspectives. Perspectives form layers of segments on a document, each representing a common kind of metadata. For example, you might run the techmanuals model on your maintenance manuals to find warnings, instruction lists, diagrams, and notes. Pages are then broken down into these types of segments, which mirror the mental model a reader brings to a page. Another perspective might capture mentions of tools and parts inside paragraphs and lists.

Whenever you configure an enrichment, all of its outputs are placed into a shared perspective on the document. This lets you quickly filter and overlay multiple layers of metadata on a document at once, and it lets multiple users view the same documents from different viewpoints without interfering with each other: every perspective has its own segments, so metadata from different perspectives can occupy the same region of a page without colliding.
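To make the relationships between collections, documents, perspectives, and segments concrete, here is a small in-memory sketch. It is a hypothetical illustration only, not RedShred's API: the class names, the fields (`Region`, `segment_type`, `enrichment_config`), the `{"model": "techmanuals"}` config shape, and the `overlay` helper are all assumptions made for this example. It shows how two perspectives can hold segments over the same area of a page without either one overwriting the other.

```python
# Hypothetical model of the concepts above; NOT the RedShred API.
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Region:
    """A rectangle on one page of a document (hypothetical page coordinates)."""
    page: int
    x0: float
    y0: float
    x1: float
    y1: float

    def overlaps(self, other: Region) -> bool:
        # Rectangles overlap when they share a page and intersect on both axes.
        return (
            self.page == other.page
            and self.x0 < other.x1 and other.x0 < self.x1
            and self.y0 < other.y1 and other.y0 < self.y1
        )


@dataclass
class Segment:
    """A region of interest plus the enrichment metadata attached to it."""
    segment_type: str
    region: Region
    metadata: dict = field(default_factory=dict)


@dataclass
class Document:
    name: str
    # Perspective name -> that perspective's own, independent layer of segments.
    perspectives: dict[str, list[Segment]] = field(default_factory=dict)

    def add_segment(self, perspective: str, segment: Segment) -> None:
        self.perspectives.setdefault(perspective, []).append(segment)

    def overlay(self, region: Region, names: list[str] | None = None) -> dict[str, list[Segment]]:
        """Return each selected perspective's segments that overlap `region`."""
        selected = names if names is not None else list(self.perspectives)
        return {
            name: [s for s in self.perspectives.get(name, []) if s.region.overlaps(region)]
            for name in selected
        }


@dataclass
class Collection:
    """Documents sharing permissions and one (hypothetical) enrichment config."""
    name: str
    enrichment_config: dict
    documents: list[Document] = field(default_factory=list)


# Usage: a warning paragraph and a tool mention inside it cover the same page
# area, but they live in separate perspectives, so neither collides with the other.
manuals = Collection("maintenance-manuals", enrichment_config={"model": "techmanuals"})
doc = Document("pump-manual.pdf")
manuals.documents.append(doc)
doc.add_segment("techmanuals", Segment("warning", Region(3, 50, 100, 550, 180)))
doc.add_segment("tools-and-parts",
                Segment("tool", Region(3, 120, 110, 200, 125), {"entity": "torque wrench"}))

page_3 = Region(3, 0, 0, 612, 792)
for name, segs in doc.overlay(page_3).items():
    print(name, [s.segment_type for s in segs])
```

Keying segments by perspective name, rather than storing one flat list, is what lets each layer be filtered or overlaid independently in this sketch.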

Turn the page and let’s get started using RedShred!